As a Product Owner (PO), I can’t stand it when my team doesn’t deliver, at the end of the sprint, the increment they promised (in its entirety!). I spend time with my customers to find out what they want. Then I design user features that I turn into work packages, which I prioritise, refine and schedule with the team. We even make an agreement together before starting a new sprint. After all this, when my team doesn’t deliver everything they committed to, I have the unpleasant feeling that all this preparation was wasted effort. All that SCRUM overhead for nothing! I feel like Rigor Mortis in Yes, Dark Lord:

“We did not see this problem coming.”

“It took longer than expected.”

“The library is poorly documented.”

“Our reference system was down.”

“We didn’t understand it that way.”

“Someone in the team was sick for three days.”

These are the kinds of things my teams of goblins say to creatively justify why they don’t deliver to specification at the end of the sprint. Because of these failures, some features have to be postponed to a later sprint and are therefore not delivered on time. Sometimes bugs are discovered during software demonstrations to our customers: suddenly the software just doesn’t work, “for no reason”, and the goblins invoke the “demo effect”. In the end, Rigor Mortis has no choice but to dole out the withering look.

Of course, as a PO, I can also be responsible for failure. Sometimes I have steered my team of elves towards the wrong features. We all know the famous tree-swing caricature of project management.

It is quite easy for a PO to misunderstand the needs of the customers, and even when he or she understands them correctly, the PO can fail to communicate them to the team. Similarly, when the PO uses inappropriate means to monitor the team’s progress, he or she is entirely responsible for the failure. Talking to team members (e.g. in daily meetings) is not enough to get an overview of what is going on. What the PO needs instead are numbers to assess progress. As Lord Kelvin said, “when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind”. In software development, “working software is the primary measure of progress”, as stated in the 7th principle of the Agile Manifesto. Therefore, we need a way to connect what our customer wants with what our team is developing in a measurable way, and this is precisely what I would like to address now by presenting … a free communication tool.

It is time to escape the hell of rigor mortis.

Let’s close the agile parenthesis for now and focus on the real problems. Let’s say a customer comes in and asks:

“Hey, I would like you to add a login feature to my platform.”

We have two problems. On the one hand, we have to determine exactly what they want. On the other hand, we have to make sure that our software team understands exactly what we want and that they figure out themselves how to achieve it in an efficient and reliable way.

From the client to the “three amigos”.

From the client’s point of view, the functionality can be summarised by the following user story:

> As a shop manager,
>
> I want to connect to the management dashboard,
>
> so that I can access my shop’s sensitive data.

But, well, there are many aspects to this user story. For a start, the customer has to decide what kind of credentials they want to use. A username/password combination? Can the username be an email? Do they want to enable multi-factor authentication (MFA)? Do they accept any password, or do they enforce a password policy? How long should a login remain valid? Is a “remember me” function required? Finally, what should this feature look like visually?

A discussion with the customer could lead to the following Gherkin specification:

```gherkin
Feature: Manager can log on to the management dashboard

  As a shop manager,
  I want to log on to the management dashboard,
  so that I can access my shop's sensitive data.

  Scenario: The manager provides valid credentials on the admin application
    Given a registered manager
    When she logs on to the admin application with a valid email and password
    Then she is granted access to her dashboard

  Scenario: The manager provides wrong credentials
    Given a registered manager
    When she logs on to the admin application with invalid credentials
    Then she is denied access
```

```gherkin
Feature: Password policy

  As a user,
  I need to be encouraged to use strong passwords,
  for the sake of security.

  Scenario Outline: The password is invalid
    A password complies with the password policy if, and only if,
    it satisfies the following criteria:
    - contains at least 8 characters
    - mixes alphanumeric characters
    - contains at least one special character

    Given the password <non-compliant password>
    Then it is not compliant

    Examples:
      | non-compliant password |
      | 1234                   |
      | 1l0v3y0u               |
      | ufiDo_anCyx            |
      | blabli 89qw lala hI    |
```

This is a summary of our discussion with the client. We put ourselves in the shoes of a manager using the system and proposed use cases, or scenarios. Note that the above specification is written in Gherkin syntax, but you can choose whichever syntax you prefer. Personally, I like to use Gherkin syntax, which is supported by many programming languages, or Gauge’s Markdown syntax, which is supported by fewer programming languages (C#, Java, JavaScript, Python and Ruby at the time of writing). You can find more information on these two options, as well as on other choices, here.

In addition to the above specification, the client gives us carte blanche for the visual aspects. That’s it for the high-level use cases. In essence, they don’t want MFA or a “remember me” feature. Instead, they want to keep it simple and stupid, with basic email/password authentication and a simple password policy.
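To give an idea of what the team will eventually have to build, here is a minimal Python sketch of the password policy agreed with the client. It is only an illustration under our own assumptions: “mixes alpha-numerical characters” is read as “contains at least one letter and at least one digit”, and “special character” as ASCII punctuation (Python’s string.punctuation), so a space does not count. The name is_compliant is hypothetical. Under these assumptions, the four non-compliant examples of the Scenario Outline are all rejected.

```python
import string

# Assumption: a "special character" is ASCII punctuation; whitespace does not count.
SPECIAL_CHARACTERS = set(string.punctuation)
MIN_LENGTH = 8


def is_compliant(password: str) -> bool:
    """Return True if the password satisfies the (assumed) password policy."""
    long_enough = len(password) >= MIN_LENGTH
    # "mixes alpha-numerical characters": at least one letter and one digit
    has_letter = any(c.isalpha() for c in password)
    has_digit = any(c.isdigit() for c in password)
    has_special = any(c in SPECIAL_CHARACTERS for c in password)
    return long_enough and has_letter and has_digit and has_special


# The non-compliant examples from the Scenario Outline
NON_COMPLIANT = ["1234", "1l0v3y0u", "ufiDo_anCyx", "blabli 89qw lala hI"]

for candidate in NON_COMPLIANT:
    print(f"{candidate!r}: compliant={is_compliant(candidate)}")
```

With the same rules, a password such as S3cure!pass would be accepted. Note how the last example forces a decision on whether a space is a special character: this is exactly the kind of question the Gherkin examples help bring to light before any production code exists.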

But the story doesn’t end there. The functionality needs to be implemented, and this is where the “three amigos” come in. While it’s nice to have clearly defined what the customer wants, “what if” scenarios may be missing. For example, our developers and testers might find other non-compliant passwords. What’s more, the high-level features above certainly don’t cover the entire login function. For example, what does it mean to be logged in? How can we validate the session? The team is free to choose a technology, for example PASETO or JWT authentication; with JWT, the following more technical feature could be designed:

```gherkin
Feature: Authenticated users validate their JWTs

  As a user,
  I want to validate my JWT,
  so that I can infer if my session is still valid.

  Scenario: A valid JWT validates
    Given a registered user
    And the user has logged in with valid credentials
    When she validates her JWT
    Then she gets her user id

  Scenario: An expired JWT does not validate
    Given a registered user
    When she validates an expired JWT
    Then she gets error message
      """
      Could not verify JWT: JWT expired
      """

  Scenario: A JWT with inconsistent payload does not validate
    Given a registered user
    And the user has logged in with valid credentials
    When she modifies the JWT payload
    And validates the JWT
    Then she gets error message
      """
      Could not verify JWT: JWT error
      """

  Scenario Outline: A non-JWT does not validate
    Given the non-JWT "<non-jwt>"
    When the user validates the JWT
    Then she gets error message
      """
      <error message>
      """

    Examples:
      | description   | non-jwt        | error message                     |
      | random string | my-invalid-jwt | Could not verify JWT: not a jwt   |
      | empty string  |                | Could not verify JWT: empty token |
```

Note in passing how our initial user story corresponds to three different Gherkin features. There is no one-to-one correspondence between user stories and Gherkin features, except perhaps in the initial phase of a project. User stories are a planning tool, whereas Gherkin features are a communication tool. They do not live on the same layer.

We can imagine all sorts of processes (agile, V-model or other) to discuss the updated specification with the customer or stakeholders and further clarify the functionality. This is high-level business communication about what the functionality should look like.

Nevertheless, although we have clarified what needs to be done, it is still difficult to estimate when the login function will be delivered, as we haven’t yet thought about how to achieve it. To establish this estimate, let’s implement the above tests. After all, we now have text files describing how the feature is supposed to behave. Why not write code that emulates a manager trying to connect to her dashboard? When the development team does this, they discover the interfaces, objects and high-level APIs they need to interact with, which helps define the overall architecture of our login functionality. As Uncle Bob writes in his book Agile Software Development, Principles, Patterns, and Practices, “starting by writing acceptance tests has a profound effect on the architecture of the system” (Chapter 4, “Testing”). In a way, this is the same kind of experience as the one Uncle Bob and Robert S. Koss describe in The Bowling Game: An example of test-first pair programming, except that theirs focuses on unit testing, i.e. on implementation details, rather than on the overall architecture of the system. Depending on the structure of your organization, the entire team, the architects or the team leaders will write these tests in preparation for planning. In doing so, they are bound to come across some surprises, and may even challenge the functionality, in order to gain the best possible understanding of the subject. If we can avoid playing planning “poker” (or its equivalent) blind, we greatly reduce the risk of misleading our stakeholders: the more information the team obtains about the implementation, the less risky the poker will be.

Let’s take a simple scenario as an example to illustrate how the specification could be linked to the test code. Suppose we want to implement the following scenario:

```gherkin
Scenario: A valid JWT validates
  Given a registered user
  And the user has logged in with valid credentials
  When she validates her JWT
  Then she gets her user id
```

A possible implementation in Python could look like this (for example, with the behave library):

```python
from behave import given, when, then

# Project-specific helpers (hypothetical module path): User and validate_token
# wrap the API client of the system under test.
from features.support.api_client import User, validate_token


@given(u'a registered user')
def step_impl(context):
    # the auth_fixtures contain registered user data
    context.current_user = context.auth_fixtures[0]


@given(u'the user has logged in with valid credentials')
def step_impl(context):
    # the User class abstracts out communication with the API through a client
    user = User(context)
    context.current_jwt, _ = user.login(
        context.current_user['email'], context.current_user['password'])


@when(u'she validates her JWT')
def step_impl(context):
    token = context.current_jwt
    # this function abstracts out communication with the API through a client;
    # it makes context.response_payload available for the "then" step
    validate_token(context, token)


@then(u'she gets her user id')
def step_impl(context):
    assert context.response_payload['userId'] == context.current_user['id']
```

This code is the result of the team’s architectural debates. Team members find compromises, research technologies and run spikes until they arrive at a complete set of implemented Gherkin steps that drive the most pragmatic, minimalist and cost-effective implementation of the desired functionality.

The Python snippet above exercises the software that your team will develop. Before the start of a development iteration, the scenarios fail for lack of an implementation. As time goes on, more and more of these Gherkin scenarios pass. When the team is done with the implementation, all scenarios pass and the code provides living documentation of what is happening in the software. This documentation is very powerful: each feature can be enriched with architectural decisions, sketches and a direct link to the execution of production code.

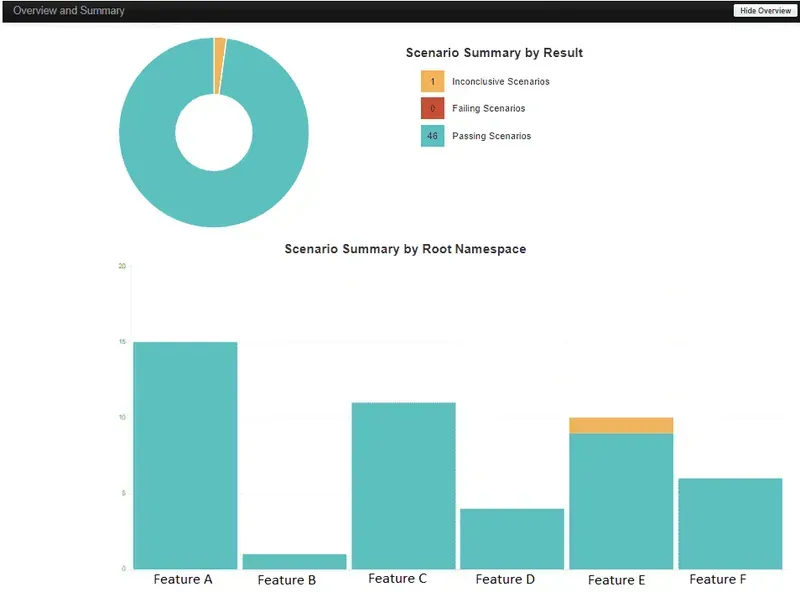

We now have a specification and its underlying tests. Our team knows what to do and how to do it. When they run those tests, they know how far they have come towards their goal. Planning and tasking are easy because the functionality has been refined and clarified. If the development team practises continuous integration, Rigor Mortis can even get live feedback on development progress. While his sprites are at work, he can monitor their activities and get a better idea of what they are doing and how, for example through a dashboard like the following, which is updated with every push to the main branch of the repository:

These are precisely the numbers we talked about earlier in this post. The dashboard clearly shows how many scenarios are successful, failed or inconclusive. We can put numbers on the progress of development and measure how much working software the team has produced so far. From the results shown on this particular dashboard, we can deduce that the team will soon be ready to deliver the user stories it has committed to.
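Such numbers are easy to derive from the test results themselves. As a minimal sketch, assuming the acceptance tests produce an NUnit 3 style XML report in which each scenario appears as a test-case element with a result attribute (Passed, Failed, Inconclusive, ...), the following Python function computes the share of passing scenarios. The function name scenario_progress and the sample report are made up for illustration.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def scenario_progress(nunit_xml: str) -> dict:
    """Count NUnit 3 test-case results and the share of passing scenarios."""
    root = ET.fromstring(nunit_xml)
    # one test-case element per scenario (assumption about the report layout)
    counts = Counter(case.get("result", "Unknown") for case in root.iter("test-case"))
    total = sum(counts.values())
    passed = counts.get("Passed", 0)
    return {
        "total": total,
        "passed": passed,
        "failed": counts.get("Failed", 0),
        "inconclusive": counts.get("Inconclusive", 0),
        "progress": passed / total if total else 0.0,
    }


# Made-up sample report for illustration
SAMPLE = """<test-run>
  <test-suite type="TestFixture" name="PasswordPolicy">
    <test-case name="1234" result="Passed"/>
    <test-case name="1l0v3y0u" result="Passed"/>
  </test-suite>
  <test-suite type="TestFixture" name="JwtValidation">
    <test-case name="A valid JWT validates" result="Failed"/>
    <test-case name="An expired JWT does not validate" result="Inconclusive"/>
  </test-suite>
</test-run>"""

print(scenario_progress(SAMPLE))
# → {'total': 4, 'passed': 2, 'failed': 1, 'inconclusive': 1, 'progress': 0.5}
```

In a pipeline, you would pass it the content of the actual report file instead; the progress ratio is then a "working software" figure that a PO can follow from one push to the next.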

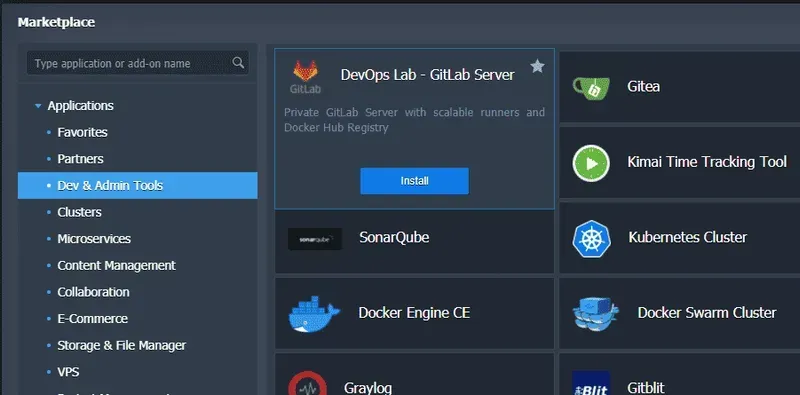

It is quite easy to set up this live reporting and documentation. For example, with the GitLab server from the Jelastic marketplace on Hidora, or with Hidora’s remarkable GitLab as a Service, you can define pipeline jobs on your repository that run the Gherkin tests, package their results in an XML file, generate the Pickles report and publish it. Assuming you have a Docker image containing Pickles that can be pulled from a Docker registry, for example one built from the following Dockerfile,

```dockerfile
# First stage: download and unpack the Pickles release
FROM mono:latest AS unpacker

ARG RELEASE_VERSION=2.20.1

ADD https://github.com/picklesdoc/pickles/releases/download/v${RELEASE_VERSION}/Pickles-exe-${RELEASE_VERSION}.zip /pickles.zip

RUN apt-get update \
    && apt-get install -y unzip \
    && mkdir /pickles \
    && unzip /pickles.zip -d /pickles

# Second stage: keep only the unpacked Pickles binaries on top of Mono
FROM mono:latest

COPY --from=unpacker /pickles /pickles
```

you can, for example, extend your .gitlab-ci.yml like this for a SpecFlow acceptance test project:

```yaml
stages:
  - build
  - test
  - deploy
  - review
  - publish-pickles-reports

# [...]

# here you deploy e.g. your staging environment
# the scripts are kept to their minimum for the sake of readability
# usually, you would want to ensure that your environment is in stopped state
# and you would also want to wait until all k8s deployments / jobs have been deployed
deploy:
  stage: deploy
  image: my-docker-registry/devspace:latest
  variables:
    # <your deployment variables>
  environment:
    name: $YOUR_STAGING_ENV_NAME
    # we deploy to our staging domain
    url: $URL_TO_YOUR_STAGING_ENVIRONMENT
  before_script:
    - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
  script:
    - devspace deploy --build-sequential -n $KUBE_NAMESPACE -p staging
  only:
    - master

# here you perform your acceptance tests on the deployed system
acceptance-test:
  stage: review
  image: mcr.microsoft.com/dotnet/sdk:5.0-alpine-amd64
  variables:
    # <your acceptance test variables>
  script:
    # this command assumes you have JunitXml.TestLogger and NunitXml.TestLogger
    # installed in your .NET Core / .NET 5 project
    - |
      dotnet test ./features/Features.csproj --logger:"nunit;LogFilePath=./features/nunit-test-reports/test-result.xml" \
        --logger:"junit;LogFilePath=./features/junit-test-reports/test-result.xml;MethodFormat=Class;FailureBodyFormat=Verbose"
  artifacts:
    reports:
      # gitlab does not understand nunit, we need junit test data here
      # for the gitlab test reporting
      junit:
        - ./features/junit-test-reports/*.xml
    paths:
      - ./features/junit-test-reports/*.xml
      # the following xml files will be used in the pages job
      - ./features/nunit-test-reports/*.xml
  only:
    - master

# [...]

# here you publish your living documentation with the test results
pages:
  stage: publish-pickles-reports
  image: my-docker-registry/pickles:latest
  dependencies:
    - acceptance-test
  script:
    # this command generates a pickles documentation with test results
    # in the ./public folder, which is the source folder for the gitlab pages
    - |
      mono /pickles/Pickles.exe --feature-directory=./features \
        --output-directory=./public \
        --system-under-test-name=my-test-app \
        --system-under-test-version=$CI_COMMIT_SHORT_SHA \
        --language=en \
        --documentation-format=dhtml \
        --link-results-file=./features/nunit-test-reports/test-result.xml \
        --test-results-format=nunit3
  artifacts:
    paths:
      - public
    expire_in: 30 days
  only:
    - master
```

The Pickles report, as well as the dynamic HTML Pickles specification, can be published on GitLab Pages as in the excerpt above, or packaged in a Docker container that can then be deployed to your Kubernetes cluster, Jelastic environment or CDN.

Last words

Returning to the agile metaphor, I hope it is clear to any SCRUM team that “Product Owner” is a synonym for “Mr/Ms Clarity”. A PO should have their requirements crystal clear before handing them over to the development team for implementation. Also, to avoid surprises at the end of a sprint, a PO needs a clear, live measure of how the sprint is going, and a sprint burndown chart says absolutely nothing about how much working software the team has produced. Of course, my suggestion won’t prevent failures every time, but it will at least help to reduce them and make stakeholders happier.

Moreover, when newcomers join your development team and you want them to be productive immediately, you can treat the Gherkin features and their corresponding milestone implementations as a kind of “do-it-yourself” kit with high-level guidance. When they run the feature tests, they get autonomous feedback on the status of their implementation. The overall architecture has already been defined; the newcomer only has to fill in the gaps with implementation details. The same applies when you have to delegate software development to a third-party company. Instead of writing long Word documents, you could try the methodology described in this post. Code does not lie and cannot be misinterpreted; Word documents can, especially when you are, for example, a Swiss company writing documents in English for a company in the Czech Republic. In such a case, even if everyone has a good level of English, different cultural contexts sometimes make it hard for people from different countries to understand each other clearly, turning the delegation of software development into a game of Chinese whispers.